Texts, ranging from well-known fiction to archival records, court rulings, and social media exchanges, offer unique opportunities for research and data analysis across various fields. However, they also present significant challenges.

Text is a form of unstructured data: it lacks the organized, tabular format of numerical data, which makes it difficult to fit into predefined models. This does not mean that analyzing text data is impossible, but it does require some extra pre-processing steps.

What is Tokenization in NLP?

Tokenization is a key initial step in the pre-processing of unstructured text data in Natural Language Processing (NLP). It involves breaking down text into smaller components called tokens. This step is vital for introducing structure to unstructured data, allowing it to be organized, modeled, and analyzed.

Tokens can range from full sentences to individual words, numbers, or dates, depending on the analytical needs. Tokenization typically uses spaces or punctuation to determine where one token ends and another begins. For instance, the sentence:

“I love rock music: it’s fun.”

would be tokenized as:

[‘I’, ‘love’, ‘rock’, ‘music’, ‘:’, ‘it’, “’”, ‘s’, ‘fun’, ‘.’]
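A split like the one above can be reproduced with a single regular expression. This is a minimal sketch using only Python's standard library; the function name `tokenize` and the exact pattern are assumptions chosen for illustration, not the rule any particular NLP library applies.

```python
import re

def tokenize(text):
    # \w+ matches a run of letters, digits, or underscores (a word or number).
    # [^\w\s] matches one character that is neither a word character nor
    # whitespace, so each punctuation mark becomes its own token.
    return re.findall(r"\w+|[^\w\s]", text)

tokens = tokenize("I love rock music: it’s fun.")
print(tokens)
# ['I', 'love', 'rock', 'music', ':', 'it', '’', 's', 'fun', '.']
```

Production tokenizers usually treat contractions such as “it’s” more carefully than this pattern does, so the output here should be read as one possible convention.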

Tokenization comes in various types, such as word, character, or sub-word tokenization. It is often followed by identifying and removing stop words: common words like “the,” “an,” or “in” that do not contribute significantly to the analysis. You can either use predefined lists of stop words for a particular language or create a custom list to suit specific requirements.
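To make the difference between granularities concrete, the sketch below produces word-level and character-level tokens from the same sentence and then filters the word tokens against a custom stop word list. The sample sentence and the `STOP_WORDS` set are made up for illustration. Sub-word tokenization is left out because it normally depends on a vocabulary learned from a corpus.

```python
import re

text = "The cat sat in the garden"

# Word-level tokens: runs of word characters, lowercased so that
# "The" and "the" are treated as the same token.
word_tokens = re.findall(r"\w+", text.lower())

# Character-level tokens: every non-whitespace character on its own.
char_tokens = [ch for ch in text if not ch.isspace()]

# Hypothetical custom stop word list; a real project might start from
# a predefined list for the language and adjust it.
STOP_WORDS = {"the", "an", "in"}

content_tokens = [tok for tok in word_tokens if tok not in STOP_WORDS]

print(word_tokens)     # ['the', 'cat', 'sat', 'in', 'the', 'garden']
print(content_tokens)  # ['cat', 'sat', 'garden']
print(len(char_tokens))  # 20
```

Lowercasing before the lookup is a design choice: without it, a capitalized “The” at the start of a sentence would slip past the filter.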

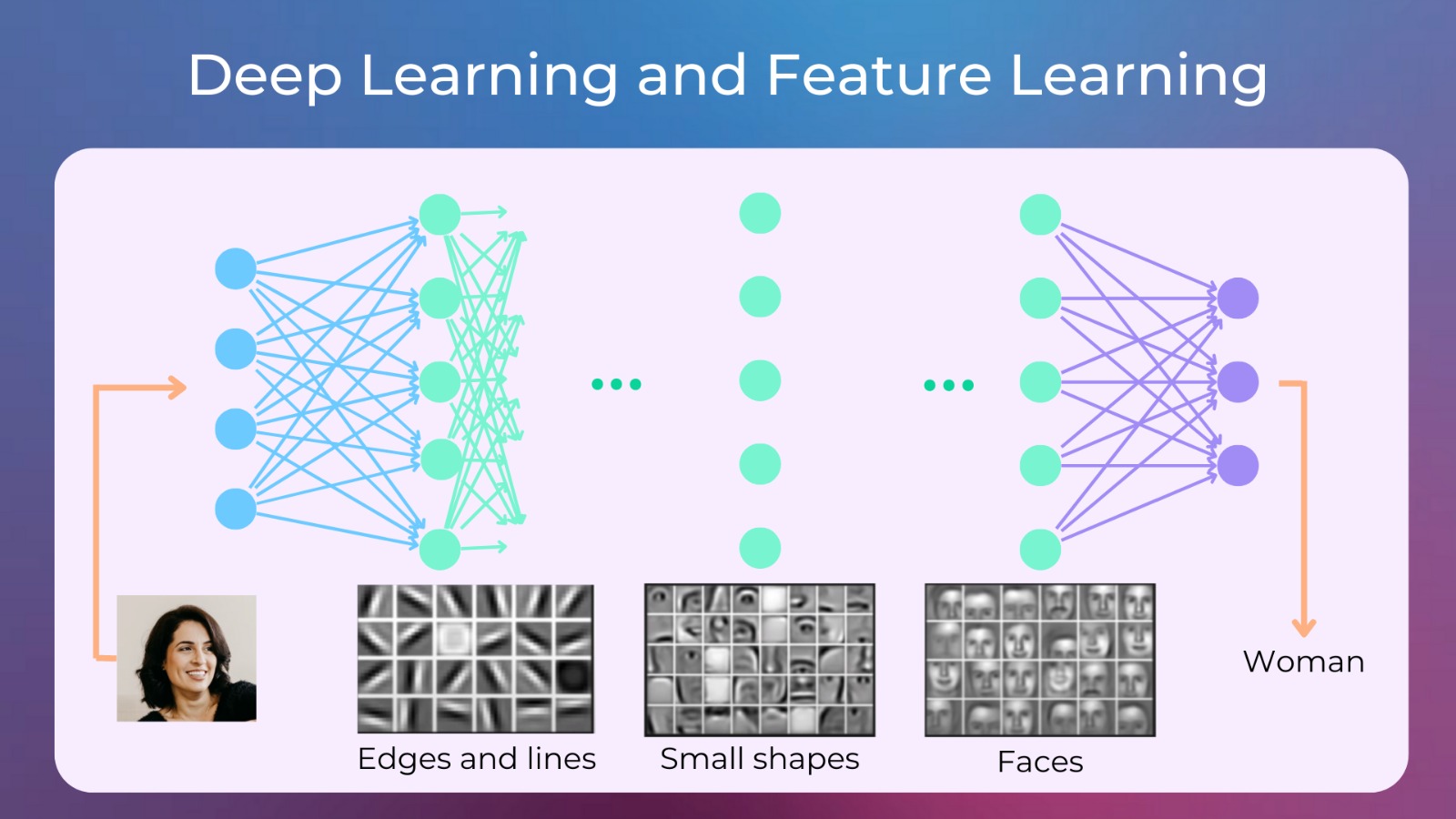

For effective text analysis, understanding individual tokens is not enough; you also need to consider their relationships and the larger structures they form. For example, the word “slate” could be a noun in the phrase “blank slate” or a verb in “We decided to slate the meeting for next month.” Thus, the context provided by surrounding tokens is crucial for more advanced text processing tasks, like sentiment analysis or topic modeling.
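One simple way to expose that context to a program is to collect the tokens on either side of a target word. The helper below is a hypothetical sketch (the name `context_window` and the default window size are assumptions); it does not decide the part of speech itself, it only gathers the neighbors a later step could use.

```python
def context_window(tokens, target, size=2):
    """Return a (left, right) pair of neighbor lists for each occurrence of target."""
    windows = []
    for i, tok in enumerate(tokens):
        if tok == target:
            left = tokens[max(0, i - size):i]     # up to `size` tokens before
            right = tokens[i + 1:i + 1 + size]    # up to `size` tokens after
            windows.append((left, right))
    return windows

noun_use = "blank slate".split()
verb_use = "We decided to slate the meeting for next month".split()

print(context_window(noun_use, "slate"))
# [(['blank'], [])]
print(context_window(verb_use, "slate"))
# [(['decided', 'to'], ['the', 'meeting'])]
```

The preceding “to” and the following “the meeting” are the kind of cues that point to a verb reading, while a preceding adjective such as “blank” points to a noun.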

Token Classification

While humans naturally use context to understand complex meanings, machines require more guidance.

After text is divided into tokens, these tokens are categorized and labeled according to their characteristics, such as their part of speech or associated sentiment. Named Entity Recognition (NER) systems can identify tokens related to specific entities based on word combinations or context. For example, in a business context, “Apple” might be recognized as a company, and “Steve Jobs” would be identified as a single entity representing a person’s name.
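As a rough illustration of the labeling step, the sketch below tags tokens by looking them up in a small hand-written table and merges “Steve Jobs” into a single entity. Everything here is hypothetical: the `ENTITIES` table, the label names, and the sample sentence. Real NER systems generally learn these decisions from annotated data and use context, which a plain lookup cannot do.

```python
# Hypothetical lookup table mapping token sequences to entity labels.
ENTITIES = {
    ("Steve", "Jobs"): "PERSON",
    ("Apple",): "ORG",
}

def label_entities(tokens):
    """Label each token or multi-token span; "O" marks tokens outside any entity."""
    max_len = max(len(key) for key in ENTITIES)
    labeled = []
    i = 0
    while i < len(tokens):
        # Try the longest span first so multi-word names win over their parts.
        for n in range(max_len, 0, -1):
            span = tuple(tokens[i:i + n])
            if len(span) == n and span in ENTITIES:
                labeled.append((" ".join(span), ENTITIES[span]))
                i += n
                break
        else:
            # No entity starts at this position.
            labeled.append((tokens[i], "O"))
            i += 1
    return labeled

labeled = label_entities("Steve Jobs co-founded Apple".split())
print(labeled)
# [('Steve Jobs', 'PERSON'), ('co-founded', 'O'), ('Apple', 'ORG')]
```

A lookup like this would label every “Apple” as a company, including the fruit, which is exactly the ambiguity that context-aware models are meant to resolve.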

Large Language Models (LLMs), such as the models behind ChatGPT, rely on tokenization and vast text datasets to learn the statistical relationships between tokens. Essentially, they learn to identify patterns by predicting the likelihood of tokens appearing in different contexts.
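The idea of predicting how likely a token is in a given context can be shown at toy scale by counting which token follows which. The sketch below is a simple bigram counter over a made-up corpus, not how an LLM is built: LLMs use neural networks, sub-word tokens, and far longer contexts. The names `corpus`, `following`, and `next_token_probs` are assumptions for illustration.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus, already tokenized by whitespace.
corpus = "i love rock music . i love jazz . i play rock music .".split()

# For every token, count the tokens that immediately follow it.
following = defaultdict(Counter)
for current, nxt in zip(corpus, corpus[1:]):
    following[current][nxt] += 1

def next_token_probs(token):
    """Estimate the probability of each next token from the bigram counts."""
    counts = following.get(token, Counter())
    total = sum(counts.values())
    if total == 0:
        return {}  # token never seen with a successor
    return {tok: n / total for tok, n in counts.items()}

print(next_token_probs("love"))  # {'rock': 0.5, 'jazz': 0.5}
print(next_token_probs("rock"))  # {'music': 1.0}
```

In this corpus “rock” is always followed by “music”, so the estimate is 1.0, while “love” is split evenly between two continuations.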