Natural Language Processing (NLP) is changing how people communicate with machines. From large language models to speech recognition tools, progress in NLP is no longer confined to academic research; it now shapes everyday products. This article surveys the advancements that are redefining the field.

Recent Breakthroughs in Natural Language Processing (NLP)

Natural Language Processing (NLP) has grown rapidly in recent years, changing the way computers interpret and generate human language. The advances range from better chatbots to sophisticated linguistic analysis. This overview walks through the major milestones and innovations, with a short explanation of each.

Early Developments in NLP

Rule-Based Systems

NLP’s roots lie in rule-based systems, which applied hand-written rules to process text for tasks such as translation and information retrieval. These systems were rigid: any phrasing the rule authors had not anticipated fell outside their coverage, so they struggled with the variability and ambiguity of human language.
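To make the rigidity concrete, here is a minimal sketch of a rule-based intent matcher. The rules, labels, and the `classify` function are hypothetical and invented for illustration; they are not taken from any historical system.

```python
import re

# Hypothetical hand-written rules: each pairs a regex pattern with an intent label.
RULES = [
    (re.compile(r"\b(hi|hello|hey)\b", re.IGNORECASE), "greeting"),
    (re.compile(r"\b(bye|goodbye)\b", re.IGNORECASE), "farewell"),
    (re.compile(r"\bweather\b", re.IGNORECASE), "weather_query"),
]

def classify(text):
    """Return the label of the first rule whose pattern matches, else 'unknown'."""
    for pattern, label in RULES:
        if pattern.search(text):
            return label
    return "unknown"

covered = classify("What's the weather like?")   # matches the 'weather' rule
missed = classify("Is it going to rain today?")  # same intent, but no rule covers it
```

The second query asks about the weather without using the word, so it falls through to `"unknown"`. Covering it means writing another rule by hand, which is the scaling problem described above.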

Statistical Approaches

During the 1980s and 1990s, the field shifted towards statistical methods, which estimate patterns in language from large datasets rather than relying on hand-written rules. Techniques like Hidden Markov Models (HMMs) and n-grams improved language processing capabilities, but they captured only limited local context.
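As a rough illustration of the n-gram idea, the sketch below estimates bigram probabilities by counting adjacent word pairs. The three-sentence corpus and the function names are made up for this example, and the estimate is a plain maximum-likelihood count with no smoothing.

```python
from collections import Counter, defaultdict

def train_bigrams(sentences):
    """Count how often each word follows each preceding word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        # <s> and </s> mark the sentence start and end.
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, curr in zip(tokens, tokens[1:]):
            counts[prev][curr] += 1
    return counts

def bigram_prob(counts, prev, curr):
    """Maximum-likelihood estimate of P(curr | prev); 0.0 if prev was never seen."""
    total = sum(counts[prev].values())
    return counts[prev][curr] / total if total else 0.0

# Toy corpus, invented for illustration.
corpus = ["the cat sat", "the dog sat", "the cat ran"]
model = train_bigrams(corpus)

p_cat_given_the = bigram_prob(model, "the", "cat")   # "cat" follows "the" in 2 of 3 cases
p_flew_given_cat = bigram_prob(model, "cat", "flew")  # never observed, so 0.0
```

The zero probability for an unseen pair shows one limit of this approach: the model knows nothing beyond the exact word sequences it has counted.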

The Rise of Machine Learning in NLP

Machine Learning Techniques

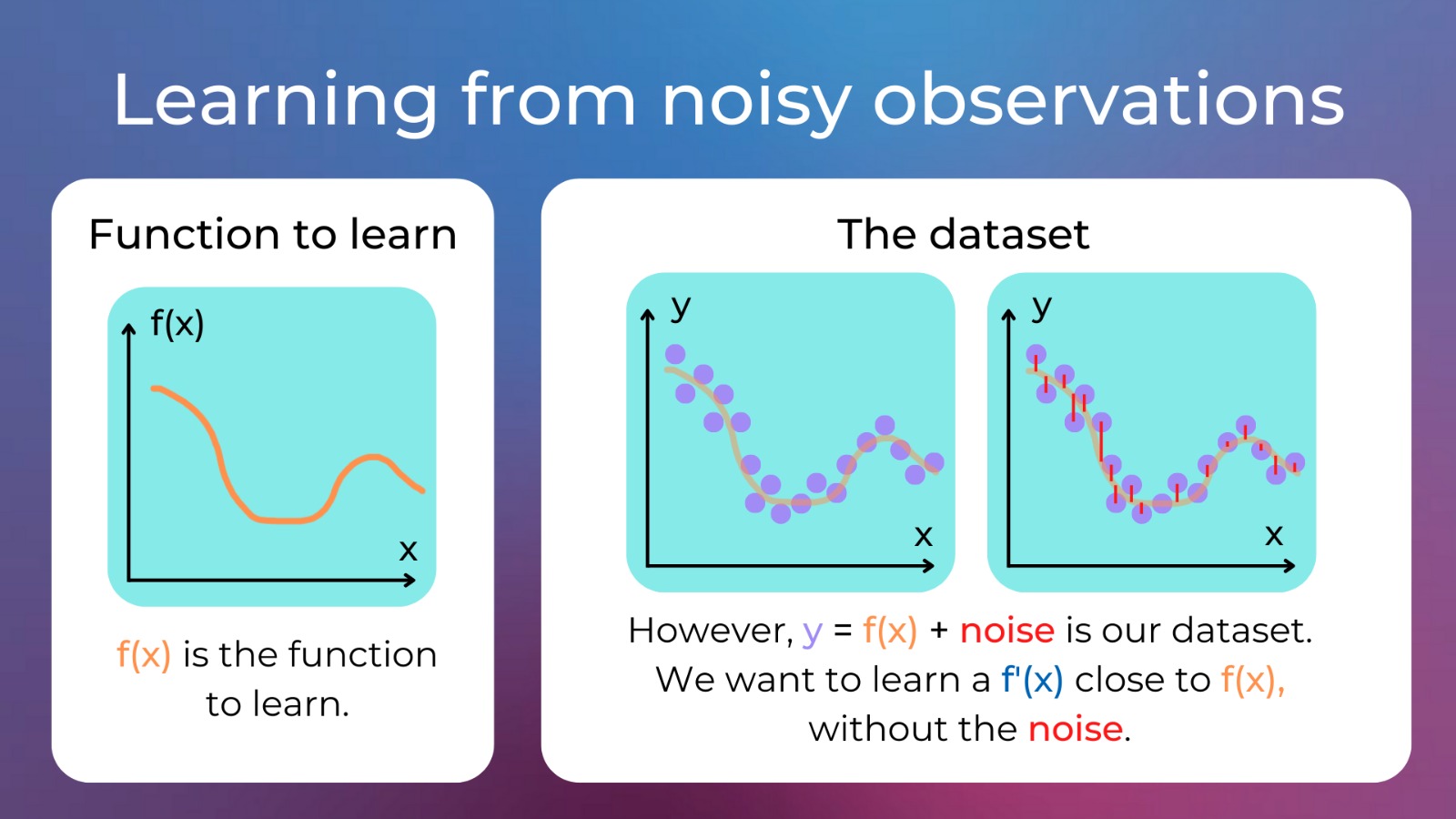

The next significant leap came with the introduction of machine learning, which allowed models to be trained on large volumes of text data, creating more adaptable NLP systems. Supervised learning, where models learn from labeled datasets, became a common method for various tasks, such as sentiment analysis and named entity recognition.
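A minimal sketch of supervised learning for sentiment analysis follows, using a multinomial Naive Bayes classifier with add-one smoothing. Naive Bayes is one common choice picked here for brevity, not a method the article singles out; the four labeled examples and the function names are invented.

```python
import math
from collections import Counter

def train_nb(examples):
    """Collect per-label document counts and word counts from (text, label) pairs."""
    label_counts = Counter()
    word_counts = {}
    vocab = set()
    for text, label in examples:
        label_counts[label] += 1
        counts = word_counts.setdefault(label, Counter())
        for word in text.lower().split():
            counts[word] += 1
            vocab.add(word)
    return label_counts, word_counts, vocab

def predict_nb(model, text):
    """Return the label with the highest log-probability under Naive Bayes."""
    label_counts, word_counts, vocab = model
    total_docs = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, n_docs in label_counts.items():
        score = math.log(n_docs / total_docs)  # log prior
        denom = sum(word_counts[label].values()) + len(vocab)  # add-one smoothing
        for word in text.lower().split():
            if word in vocab:  # words never seen in training are ignored
                score += math.log((word_counts[label][word] + 1) / denom)
        if score > best_score:
            best_label, best_score = label, score
    return best_label

# Tiny labeled dataset, invented for illustration.
train_data = [
    ("great movie loved it", "pos"),
    ("wonderful acting great plot", "pos"),
    ("terrible movie hated it", "neg"),
    ("boring plot awful acting", "neg"),
]
nb_model = train_nb(train_data)
prediction = predict_nb(nb_model, "great acting")
```

The classifier never sees a rule such as "great means positive"; it infers the association from the labeled examples, which is what makes the approach adaptable to new data.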

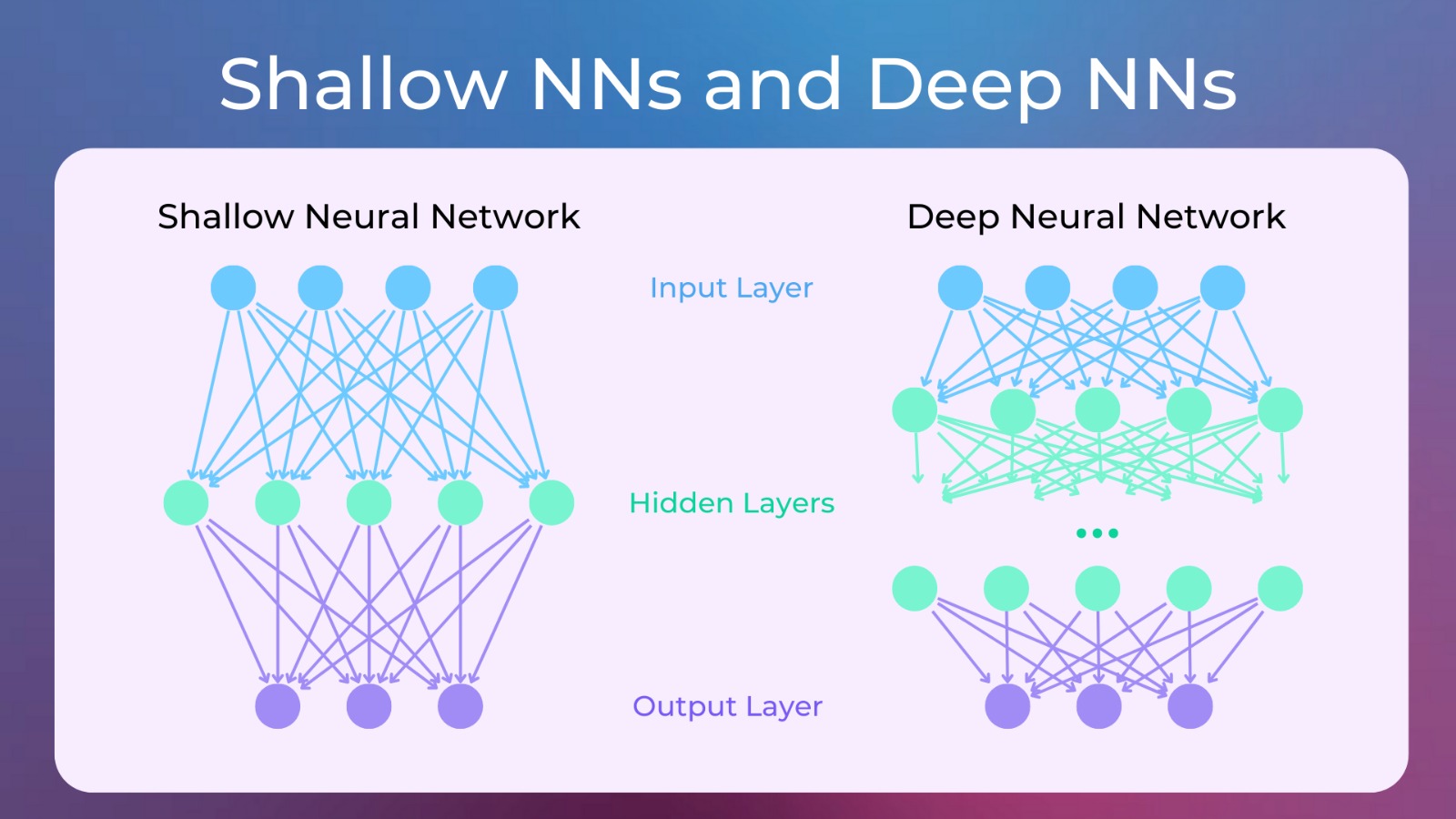

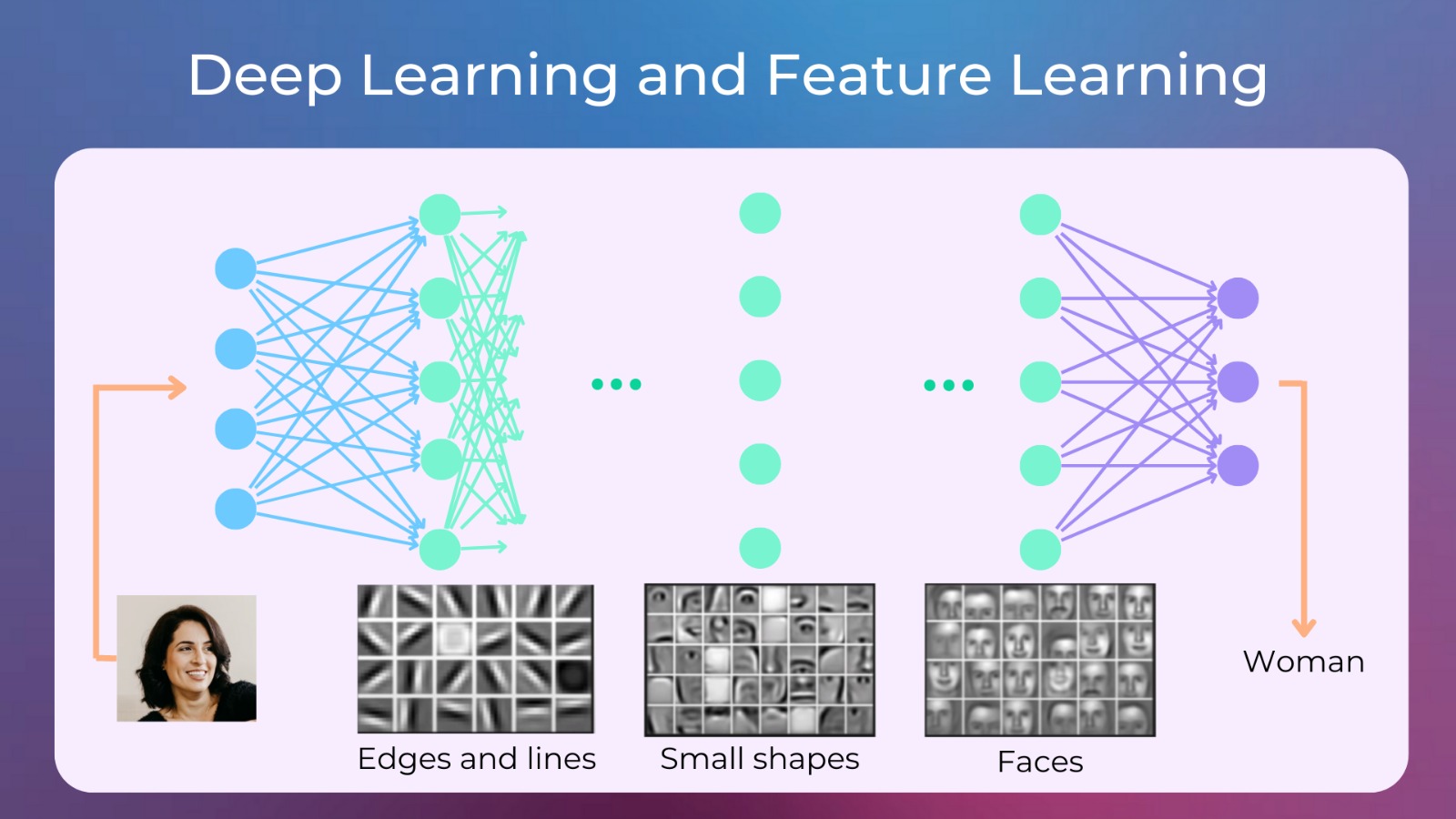

Impact of Deep Learning

Deep learning further transformed NLP with the use of neural networks, including Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs). These models could learn complex patterns and contextual relationships within text, greatly enhancing the accuracy and effectiveness of NLP tasks.

Key Innovations and Technologies in NLP

Word Embeddings

A crucial advancement in NLP was the development of word embeddings, such as Word2Vec and GloVe. These methods learn dense vector representations in which words that appear in similar contexts end up close together, so the vectors capture aspects of meaning and the relationships between words. Embeddings laid the foundation for more sophisticated language models.
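The sketch below shows how closeness between word vectors is commonly measured, using cosine similarity. The three-dimensional vectors are made-up toy values, not output from Word2Vec or GloVe, whose vectors typically have hundreds of dimensions.

```python
import math

def cosine(u, v):
    """Cosine similarity: dot product divided by the product of the vector norms."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# Hypothetical toy embeddings, chosen by hand for illustration only.
toy_vectors = {
    "king": [0.9, 0.8, 0.1],
    "queen": [0.9, 0.7, 0.2],
    "apple": [0.1, 0.2, 0.9],
}

sim_king_queen = cosine(toy_vectors["king"], toy_vectors["queen"])
sim_king_apple = cosine(toy_vectors["king"], toy_vectors["apple"])
```

With these toy values, "king" scores much closer to "queen" than to "apple", which is the kind of relationship trained embeddings are meant to encode.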

Transformers and Attention Mechanisms

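The introduction of transformers and attention mechanisms represented a major shift in NLP. Attention lets a model weigh every other token in a sequence when building the representation of a given token, and transformers apply it to all positions in parallel rather than step by step as RNNs do. Models like BERT (Bidirectional Encoder Representations from Transformers), which reads context in both directions, and GPT (Generative Pre-trained Transformer), which generates text left to right, built on this architecture and achieved state-of-the-art results in various NLP applications.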
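For readers who want to see the mechanism, here is a minimal pure-Python sketch of scaled dot-product attention, the standard formulation softmax(QK^T / sqrt(d_k))V. It assumes the queries, keys, and values are given directly as lists of vectors and leaves out the learned projection matrices, multiple heads, and masking that real transformer layers add. The three input vectors are arbitrary toy values.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention for lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Self-attention over three toy token vectors: Q, K, and V are all the same list.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
```

Each row of `weights` sums to 1 and says how much the corresponding token draws on every token in the sequence, including itself. Because each row is computed independently, all positions can be processed in parallel.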

Pre-Trained Language Models

Pre-trained language models took NLP to another level by first training on extensive text corpora and then fine-tuning for specific tasks. This approach, exemplified by BERT and GPT, achieved strong performance while reducing the need for large labeled datasets, and it has become standard practice in the field.

Major Applications of NLP

Chatbots and Virtual Assistants

Advanced NLP models have significantly improved chatbots and virtual assistants, allowing them to understand and respond to user queries more naturally and accurately. In customer service, this has made support more efficient and user-friendly.

Sentiment Analysis

NLP advancements have greatly enhanced sentiment analysis, enabling businesses to assess customer opinions through various textual data sources like reviews and social media, thus supporting data-driven decisions and improving services.

Machine Translation

Machine translation has made tremendous progress with neural models, such as those used by Google Translate. These systems now provide more accurate and fluent translations, bridging communication gaps across languages.

Text Summarization

Automated text summarization has become more advanced, allowing for the concise and coherent summarization of large documents, which is valuable in fields such as journalism, research, and content creation.

Transfer Learning and Its Impact on NLP

Role of Transfer Learning

Transfer learning has revolutionized NLP by allowing pre-trained models to be adapted for specific tasks with minimal additional training data. This method has become a cornerstone in the development of versatile NLP solutions.

Benefits of Pre-training and Fine-Tuning

- Reduced Need for Labeled Data: Less reliance on large amounts of labeled data.

- Improved Performance: Enhanced model accuracy by transferring generalized knowledge.

- Accelerated Development: Faster deployment of NLP applications.

Ethical Considerations in NLP

Addressing Bias and Fairness

Despite the advancements, NLP models face challenges such as bias. Since these models learn from existing data, they can unintentionally reinforce biases present in their training datasets. Researchers are actively working to address these fairness issues.

Privacy Concerns

The need for large amounts of data in NLP also raises privacy and security concerns. Balancing data usage with privacy protection is a key challenge for responsible NLP development.

Promoting Responsible AI

Ensuring responsible AI practices, such as transparency, accountability, and ethical usage, is essential as NLP evolves. These principles help build technology that benefits society while minimizing risks.

Future Directions in NLP

Multimodal NLP

The future of NLP involves multimodal systems that integrate text with other data types like images and audio, leading to richer and more comprehensive AI systems.

Zero-Shot and Few-Shot Learning

Zero-shot and few-shot learning enable models to perform tasks with no task-specific examples or only a handful of them, opening new possibilities for NLP applications with minimal training.
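One common way these techniques are used with large language models is prompting: the examples are placed in the input text instead of being used for training. The sketch below only builds such a prompt string. The `build_few_shot_prompt` helper, the `Text:`/`Label:` layout, and the example reviews are all assumptions made for illustration, and no particular model or API is implied.

```python
def build_few_shot_prompt(task, examples, query):
    """Assemble a prompt from a task description, labeled examples, and a new input."""
    lines = [task, ""]
    for text, label in examples:
        lines.append(f"Text: {text}")
        lines.append(f"Label: {label}")
        lines.append("")
    # The final label is left blank for the model to fill in.
    lines.append(f"Text: {query}")
    lines.append("Label:")
    return "\n".join(lines)

task = "Classify the sentiment of each review as positive or negative."

# Few-shot: two labeled examples precede the query.
few_shot_prompt = build_few_shot_prompt(
    task,
    [("I loved this film.", "positive"), ("The plot was dull.", "negative")],
    "A wonderful surprise.",
)

# Zero-shot: the same task with no examples at all.
zero_shot_prompt = build_few_shot_prompt(task, [], "A wonderful surprise.")
```

The only difference between the two settings is the number of examples supplied; in both cases the model itself is left unchanged.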

Continual Learning

Continual learning focuses on developing models that can learn from new data without forgetting previous knowledge, making them adaptable to changing language and contexts.

Enhancing Human-AI Collaboration

Future research aims to improve human-AI collaboration by developing more intuitive interfaces, improving interpretability, and adhering to ethical guidelines, enhancing the synergy between humans and machines.

Looking Ahead

NLP has made remarkable progress, but there’s much more to come. As research moves into areas such as multimodal systems, few-shot learning, and continual learning, NLP has considerable potential to change how people interact with technology, from smarter virtual assistants to more advanced linguistic tools.